An Introduction to Data Collection: Pulling OpenAQ Data from AWS S3 using AWS Athena.

Johnathan Padilla, Thomas Kidd, and Hadrien Picq are fellows in the TechSoup and ParsonsTKO Summer 2020 Data Strategy Mentorship Program, a select group of upcoming data analysts and scientists to learn more about what the social impact and non-profit analytics sectors look like from industry professionals.

To highlight this week’s theme on data collection, the team sought regulatory air quality data from various sources (specifically, raw measurements of fine particles: PM2.5). Each fellow explored and detailed the process extracting data from the following sources:

- OpenAQ’s REST API and Python wrapper;

- OpenAQ’s AWS S3 Bucket;

- The U.S. Environmental Protection Agency’s REST API AirNow.

Creating an AWS Account

First, you need to set up an AWS account so you can access Athena. Go to Amazon Web Services and click the “Create an AWS Account” in the top right of the page. It will ask for your credit card information but don’t worry it won’t charge you as long as you stay within the basic plan limits.

Creating a Data Bucket

From your AWS management console navigate to S3 (under Storage). Now we can create a bucket in which we can operate. Click the “Create Bucket” button and fill out the bucket name field. Make sure the bucket name is unique and DNS compatible. Next, make sure the region is set to US East (N. Virginia) and then press “next” until you can press “create”. Leave all settings for the bucket creation at their default. You should now have a functioning bucket

Query the OpenAQ Data

In order to query the data, you must first construct a table in which the data can be populated. I used a CREATE EXTERNAL TABLE function made with specifications of the OpenAQ data.

CREATE EXTERNAL TABLE `openaq`(

`date` struct<utc:string,local:string> COMMENT 'from deserializer',

`parameter` string COMMENT 'from deserializer',

`location` string COMMENT 'from deserializer',

`value` float COMMENT 'from deserializer',

`unit` string COMMENT 'from deserializer',

`city` string COMMENT 'from deserializer',

`attribution` array<struct<name:string,url:string>> COMMENT 'from deserializer',

`averagingperiod` struct<unit:string,value:float> COMMENT 'from deserializer',

`coordinates` struct<latitude:float,longitude:float> COMMENT 'from deserializer',

`country` string COMMENT 'from deserializer',

`sourcename` string COMMENT 'from deserializer',

`sourcetype` string COMMENT 'from deserializer',

`mobile` string COMMENT 'from deserializer')

ROW FORMAT SERDE

'org.openx.data.jsonserde.JsonSerDe'

STORED AS INPUTFORMAT

'org.apache.hadoop.mapred.TextInputFormat'

OUTPUTFORMAT

'org.apache.hadoop.hive.ql.io.HiveIgnoreKeyTextOutputFormat'

LOCATION

's3://openaq-fetches/realtime-gzipped'

TBLPROPERTIES (

'transient_lastDdlTime'='1518373755')This table is now ready to be populated with data from OpenAQ. You only need to run this function once inside of the bucket you created. To populate the table you can write a SQL query like the one below.

select *

from openaq

WHERE date.utc

BETWEEN '2018-01-01'

AND '2018-01-29'

AND parameter = 'pm25'

AND value >= 0

AND location = 'Rockville'It should be noted that location does not necessarily correspond to a city and the list of valid locations can be found here. After running this query you should have a dataset that is ready for download. In the top right corner of the results box, there is a file icon that will download the data in CSV format for you.

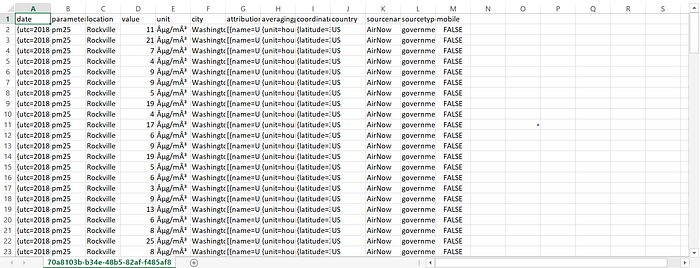

Results

You should have a CSV file like the one below. I recommend that you only query a month's worth of data at a time as the queries are already slow.

This dataset is now good to load into a Jupyter Notebook for cleaning and analysis.

Conclusion

This method for pulling data can be repurposed for other sources as long as you have a valid bucket location to pull from and some knowledge of the data types that exist there. The next steps would be to clean and prep the data for analysis so you can start analyzing the effects of COVID-19 on air quality. Look out for the next installment in this series where we will be talking about data cleaning methods and best practices.

Credit

https://gist.github.com/jflasher/573525aff9a5d8a966e5718272ceb25a